Transcribe Audio File

Transcribe audio or video files into text

How to Configure the Transcription Step

When configuring a Transcription Step, there are two main pieces to consider:

Selecting the best transcription model for your use case

How to pass your audio file to AirOps

When you're ready, click the "Configure" button on your Transcription Step to set these values.

Transcription Model

AirOps currently offers five transcription models:

Deepgram Whisper Large: fast, reliable transcription with built-in diarization (speaker identification). It can auto-detect the language, or you can set the language code.

Deepgram Nova: the fastest model to date

Deepgram Nova 2: provides the best overall value

Deepgram Enhanced: higher accuracy and better word recognition. It can auto-detect the language, or you can set the language code.

AssemblyAI: an excellent choice for diarization, since you can set the number of speakers expected in a transcript

Keep in mind: Deepgram has a 2GB file size limit, and AssemblyAI has a 5GB file size limit.
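If your files can be large, a quick size check before choosing a model can help you stay within these limits. The sketch below is one way to do that check. The helper name `providersForFileSize` is hypothetical. It also assumes that "GB" means 10^9 bytes. If the limits are actually measured in GiB (2^30 bytes), change the constant.

```javascript
// Hypothetical helper: list which providers can accept a file of a given size.
// Assumes "GB" means 10^9 bytes; if the limits are in GiB (2^30 bytes),
// set BYTES_PER_GB to 1024 ** 3 instead.
const BYTES_PER_GB = 1e9;

// File size limits as stated in this page: Deepgram 2GB, AssemblyAI 5GB.
const PROVIDER_LIMITS_GB = {
  Deepgram: 2,
  AssemblyAI: 5,
};

// Returns the providers whose size limit is at least fileSizeBytes.
function providersForFileSize(fileSizeBytes) {
  if (!Number.isFinite(fileSizeBytes) || fileSizeBytes < 0) {
    throw new RangeError("fileSizeBytes must be a non-negative number");
  }
  return Object.entries(PROVIDER_LIMITS_GB)
    .filter(([, limitGb]) => fileSizeBytes <= limitGb * BYTES_PER_GB)
    .map(([provider]) => provider);
}

console.log(providersForFileSize(1.5e9)); // [ 'Deepgram', 'AssemblyAI' ]
console.log(providersForFileSize(3e9));   // [ 'AssemblyAI' ]
console.log(providersForFileSize(6e9));   // []
```

With a 3GB recording, only AssemblyAI qualifies. Files over 5GB would need to be split or compressed before upload.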

Adding Your File into AirOps

There are currently two methods for passing your audio or video files into AirOps.

Option #1: Upload via the AirOps UI

In the Start Step of your Workflow, define your Workflow Input as "File Media".

Set this input as the "File to transcribe" in your Transcription Step.

Option #2: Upload via Google Drive

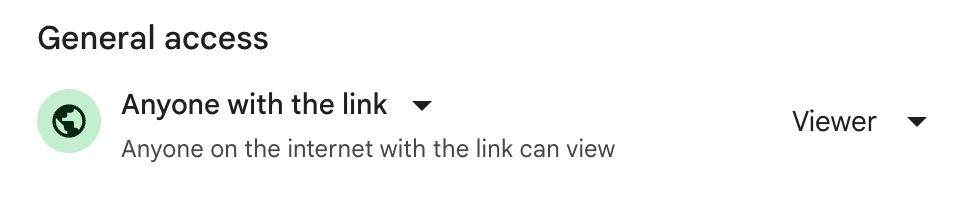

Within Google Drive, configure your audio or video file so that "Anyone with the link" can view:

Add an input with the variable name google_drive_link.

Add a code step with JavaScript that converts the shareable Google Drive URL into a downloadable URL.

Set the output of the code step as the "File to transcribe" in your Transcription Step.
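The sketch below shows one possible version of that URL conversion. It assumes the share link uses one of two common Google Drive formats: `/file/d/<FILE_ID>/view` or `open?id=<FILE_ID>`. It also assumes the widely used `uc?export=download&id=<FILE_ID>` download form. The function name `toDriveDownloadUrl` is hypothetical. The sketch does not show how an AirOps code step reads the google_drive_link input or returns its result. Wire the function up according to the code step's own conventions.

```javascript
// Minimal sketch: turn a Google Drive share link into a direct-download URL.
// Assumes one of these share formats:
//   https://drive.google.com/file/d/<FILE_ID>/view?usp=sharing
//   https://drive.google.com/open?id=<FILE_ID>
function toDriveDownloadUrl(shareUrl) {
  const url = new URL(shareUrl);
  if (url.hostname !== "drive.google.com") {
    throw new Error(`Not a Google Drive link: ${shareUrl}`);
  }

  // Format 1: the file ID is the path segment after /file/d/
  const pathMatch = url.pathname.match(/\/file\/d\/([^/]+)/);
  // Format 2: the file ID is in the "id" query parameter
  const fileId = pathMatch ? pathMatch[1] : url.searchParams.get("id");

  if (!fileId) {
    throw new Error(`Could not find a file ID in: ${shareUrl}`);
  }
  return `https://drive.google.com/uc?export=download&id=${encodeURIComponent(fileId)}`;
}

// Example input standing in for the google_drive_link value
// (how the input reaches this code in AirOps is an assumption).
console.log(
  toDriveDownloadUrl("https://drive.google.com/file/d/1AbC_dEf-123/view?usp=sharing")
);
// https://drive.google.com/uc?export=download&id=1AbC_dEf-123
```

For large files, Google Drive may return a virus-scan warning page instead of the file itself. Test the resulting URL with your own files before relying on it.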

Multiple Speakers?

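If selected, the model automatically detects multiple speakers and labels each speaker's turn in the transcript. The label format depends on the provider.

Deepgram models label speakers with numbers:

Speaker 0:

Speaker 1:

Speaker 0:

AssemblyAI labels speakers with letters:

Speaker A:

Speaker B:

Speaker A: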
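If a later step in your Workflow needs to work with individual speakers, you may want to split the transcript into turns. The sketch below does this under two assumptions. First, each turn starts on its own line with a label such as "Speaker 0:" or "Speaker A:". Second, any unlabeled lines continue the previous turn. The exact output layout is not specified on this page, and the function name `parseSpeakerTurns` is hypothetical.

```javascript
// Minimal sketch: split a diarized transcript into speaker turns.
// Assumes each turn starts on its own line with "Speaker <label>:",
// where <label> is a number (Deepgram-style) or a capital letter (AssemblyAI-style).
function parseSpeakerTurns(transcript) {
  const turns = [];
  for (const rawLine of transcript.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (!line) continue; // skip blank lines between turns

    const match = line.match(/^Speaker ([0-9]+|[A-Z]):\s*(.*)$/);
    if (match) {
      // A new label starts a new turn.
      turns.push({ speaker: match[1], text: match[2] });
    } else if (turns.length > 0) {
      // An unlabeled line continues the previous speaker's turn.
      // Unlabeled lines before the first label are ignored.
      const last = turns[turns.length - 1];
      last.text = last.text ? `${last.text} ${line}` : line;
    }
  }
  return turns;
}

const example = `Speaker 0: Thanks for joining.
Speaker 1: Happy to be here.
Speaker 0: Let's get started.`;

const turns = parseSpeakerTurns(example);
console.log(new Set(turns.map((t) => t.speaker)).size); // 2
console.log(turns[1]); // { speaker: '1', text: 'Happy to be here.' }
```

Counting distinct labels gives a quick check on diarization. With AssemblyAI, you can compare that count against the number of speakers you expected.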

Detect Language?

Check this box to automatically detect the language of the file.

Language

If Detect Language? is unchecked, you can specify the language of the audio.

Not all models support multiple languages. Check each model's documentation to see which languages it supports.
