BigQuery

Connect to a BigQuery database in AirOps

Set Up a Google Cloud Platform Service Account and Grant Access (for New Users)

To add a Google BigQuery data warehouse as a data source in AirOps, use a Google Cloud Platform service account that has read access to the datasets and tables you want to use:

1. Create a Google Cloud Platform Service Account

Go to the Google Cloud Platform Console.

Click the project dropdown and select the project that contains your BigQuery dataset.

Navigate to the IAM & Admin page, and then click Service accounts.

Click + CREATE SERVICE ACCOUNT at the top of the page.

Enter a name for your service account and click Create (labeled Create and continue in the current console).

In the Grant this service account access to project step, add the BigQuery Data Viewer and BigQuery Job User roles. Data Viewer lets the account read datasets and tables; Job User lets it run query jobs.

Click Continue, and then click Done.
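Behind the console steps above, Google Cloud gives the account an email derived from its service account ID (which the console fills in from the name you enter) and adds one IAM policy binding per role. The Python sketch below models that under stated assumptions: the helper names `service_account_email` and `role_bindings` and the example IDs are hypothetical, and the code does not call any Google API.

```python
import re

# Service account ID rules (the part before "@"): 6-30 characters,
# lowercase letters, digits, and hyphens; starts with a letter and
# does not end with a hyphen.
_ACCOUNT_ID = re.compile(r"[a-z][-a-z0-9]{4,28}[a-z0-9]")

# IAM role IDs for the two roles selected in the console.
BIGQUERY_ROLES = ("roles/bigquery.dataViewer", "roles/bigquery.jobUser")


def service_account_email(account_id: str, project_id: str) -> str:
    """Return the email address of a service account in a project (hypothetical helper)."""
    if not _ACCOUNT_ID.fullmatch(account_id):
        raise ValueError(f"invalid service account ID: {account_id!r}")
    return f"{account_id}@{project_id}.iam.gserviceaccount.com"


def role_bindings(email: str) -> list[dict]:
    """Build IAM policy bindings granting each BigQuery role to the account."""
    member = f"serviceAccount:{email}"
    return [{"role": role, "members": [member]} for role in BIGQUERY_ROLES]
```

For example, an account with the hypothetical ID `airops-bigquery` in project `my-project` would have the email `airops-bigquery@my-project.iam.gserviceaccount.com`, and `role_bindings` would produce one entry for each of the two roles.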

2. Generate a JSON key for the Service Account

On the Service accounts page, find the service account you just created.

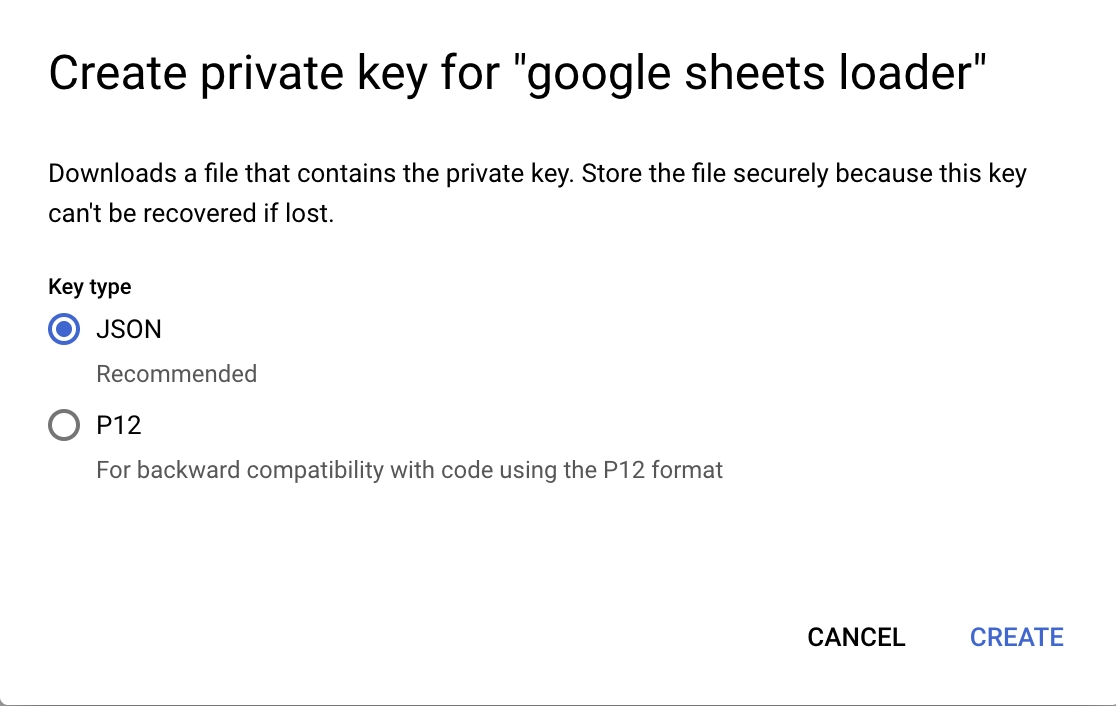

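Click the Actions menu (three vertical dots) and select Manage keys, then click Add key > Create new key. (Older versions of the console show Create key directly in the Actions menu.)

Select JSON as the key type and click Create.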

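The generated JSON key file downloads to your computer. Store it securely: anyone who has this file can access your BigQuery data with the service account's permissions.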

3. Enter the JSON Key File Content in AirOps

Open the JSON key file with a text editor.

Copy the entire content of the JSON key file.

In AirOps, paste the JSON key file content into the JSON Key field.
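If only part of the file is copied, the key will not work. The Python sketch below is a hypothetical local pre-check you could run on the content before pasting it; `check_key_content` and its list of checked fields are assumptions based on the standard fields in a key file that Google Cloud generates for a service account, not part of AirOps.

```python
import json

# Fields found in a JSON key generated for a Google Cloud service account.
REQUIRED_FIELDS = ("type", "project_id", "private_key_id", "private_key",
                   "client_email", "token_uri")


def check_key_content(text: str) -> list[str]:
    """Return problems found in pasted key-file content; an empty list means none were found."""
    try:
        key = json.loads(text)
    except json.JSONDecodeError as exc:
        # A partial copy usually leaves unbalanced braces or quotes.
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["expected a JSON object"]

    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']!r}")

    # The private key is a PEM block; both markers should be present.
    private_key = key.get("private_key")
    if isinstance(private_key, str) and private_key:
        pem = private_key.strip()
        if not (pem.startswith("-----BEGIN PRIVATE KEY-----")
                and pem.endswith("-----END PRIVATE KEY-----")):
            problems.append("private_key looks incomplete")
    return problems
```

Passing the full text of an intact key file should return an empty list; any entries point to what is missing from the copy.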

Your Google BigQuery data warehouse should now be connected and ready to use in AirOps.
